Robust Pattern Matching performance evaluation dataset

This webpage describes the testbed used for a performance evaluation and comparison of robust measures in the context of pattern matching [1]. The aim of the research work presented in [1] was to test and compare, under challenging conditions and within a pattern matching scenario, several measures proposed in the literature that are robust to photometric distortions, partial occlusions and noise. The dataset used for the performance evaluation is publicly available on this page so that anyone interested can test and compare their own proposals, as well as those of others. All images in the dataset are affected only by real distortions, in order to recreate the conditions typically found in pattern matching applications. We also plan to extend the currently available dataset with additional images acquired under different conditions.

Description

Currently the testbed includes three image datasets:

- Guitar dataset: this dataset includes 10 images and 7 patterns (extracted from a different image). The patterns were extracted from an image acquired with a good camera sensor (3 MegaPixels) and under good illumination conditions given by a lamp and indirect sunlight. The 10 images were taken with a cheaper and noisier sensor (1.3 MegaPixels, mobile phone camera). Illumination changes were introduced in the 10 images by varying the rheostat of the lamp illuminating the scene (G1-G4), by using a torch light instead of the lamp (G5-G6), by using the camera flash instead of the lamp (G7-G8), by using the camera flash together with the lamp (G9), and by switching off the lamp (G10). Additional distortions were introduced by slightly changing the camera position for each image and by JPEG compression.

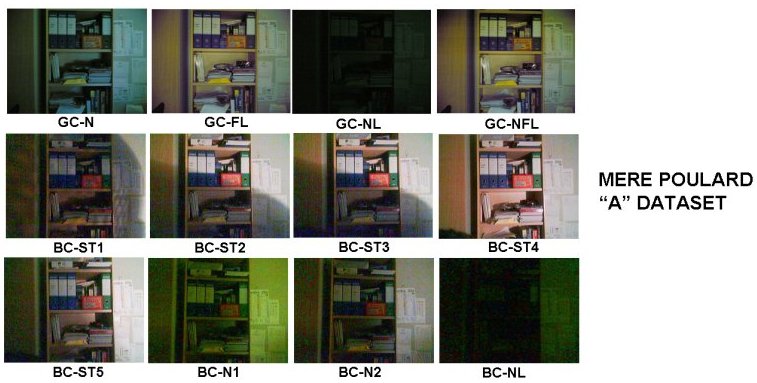

- Mere Poulard A dataset: this dataset includes 12 images and 1 pattern. The image from which the pattern was extracted was taken with a 1.3 MegaPixels mobile phone camera sensor under good illumination conditions given by neon lights. The 12 images were taken either with the same camera (prefixed by GC) or with a cheaper, 0.3 MegaPixels VGA camera sensor (prefixed by BC). Distortions are due to slight changes in the camera point of view and to different illumination conditions, such as: neon lights switched off and use of a very long exposure time (BC - N1, BC - N2, GC - N), neon lights switched off (BC - NL, GC-NL), presence of structured light given by a lamp partially occluded by various obstacles (BC-ST1, ... , BC-ST5), neon lights switched off and use of the camera flash (GC-FL), neon lights switched off with use of the camera flash and of a very long exposure time (GC-NFL). In this case too, the images are JPEG compressed.

- Mere Poulard B dataset: this dataset includes 8 images and 1 pattern. The pattern is the same as in the Mere Poulard A dataset, while the 8 images were acquired using a 0.3 MegaPixels VGA camera sensor. In this case, partial occlusion of the pattern is the most evident disturbance factor. Occlusions are generated by a person standing in front of the camera (OP1, ..., OP4) and by a book which increasingly covers part of the pattern (OB1, ..., OB4). Distortions due to illumination changes, camera pose variations and JPEG compression are also present.

Together with the image datasets, the ground truth is also provided, which allows for a direct comparison of the tested algorithms. In particular, the ground truth is represented by the coordinate pair (x,y) denoting, for each image-template instance, the position of the pattern within the image search area. NOTE: The images of the datasets are in general acquired from slightly different poses, so the objects appearing in the scene have slightly different sizes and slight rotations with respect to the reference patterns. Hence, in a pattern matching task which considers only translations, a unique position of the pattern within the image cannot always be identified. For this reason, we suggest always allowing a margin in the coordinates of the best matching position, so that matches found within plus/minus k pixels of the ground truth coordinates are also counted as correct (in [1], k was set to 5).
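The tolerance rule above can be sketched in a few lines of Python. This is only an illustrative sketch, not the evaluation code used in [1]: the function names `is_correct_match` and `match_rate` are hypothetical, and it assumes that the margin is applied independently to the x and y coordinates.

```python
from typing import Iterable, Tuple

Point = Tuple[int, int]  # (x, y) position of the pattern within the image


def is_correct_match(found: Point, truth: Point, k: int = 5) -> bool:
    """Return True if `found` lies within +/- k pixels of `truth`.

    Assumption: the margin is checked separately on x and on y.
    The default k = 5 is the value reported for [1].
    """
    return abs(found[0] - truth[0]) <= k and abs(found[1] - truth[1]) <= k


def match_rate(found: Iterable[Point], truth: Iterable[Point], k: int = 5) -> float:
    """Fraction of image-template instances matched within the margin."""
    pairs = list(zip(found, truth))
    if not pairs:
        return 0.0
    hits = sum(is_correct_match(f, t, k) for f, t in pairs)
    return hits / len(pairs)
```

For example, with a ground truth of (100, 100) and k = 5, a best match found at (105, 98) would count as correct under this sketch, while one found at (106, 100) would not.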

Terms of use and download

Researchers and, in general, anyone interested in this topic are invited to download and use this dataset, which is free and available for public use. If this dataset is used in any scientific work presented in a publication, we require that [1] be cited.

References

| [1] | F. Tombari, L. Di Stefano, S. Mattoccia, A. Galanti, “Performance evaluation of robust matching measures”, 3rd International Conference on Computer Vision Theory and Applications (VISAPP 2008), January 22-25, 2008, Funchal-Madeira, Portugal |